Sandrine Voros, DR2 INSERM

TIMC Laboratory

Computer Assisted Medical Interventions (CAMI) team


"Augmented" laparoscopic surgery

My objective is to design an "augmented" laparoscopic surgery system that:

- localizes the endoscope and the surgical instruments in space;

- enriches the environment on which the surgeon bases his surgical decisions, by allowing him to "see beyond the visible": this can be achieved by developing new morphological and functional intraoperative imaging tools (for instance, tools that highlight the prostatic capsule or malignant cells);

- fuses the different imaging modalities into a single reference frame;

- helps the surgeon make "good" decisions: the learning curve for laparoscopic surgery is commonly accepted to be long (more than 100 procedures), and studies have shown that achieving good cancer control after radical prostatectomy requires a learning curve of about 250 procedures.

Localization and tracking of surgical instruments based on image analysis

We have developed an innovative method for the 3D localization of surgical instruments from laparoscopic images (see for instance this article). The method runs at about 12 Hz and was validated through several test-bench experiments. It is currently being improved to satisfy the more demanding constraints of experiments on anatomical specimens under more realistic conditions.

The method was also used to move the ViKY® robotic endoscope holder (commercialized by EndoControl) automatically, through visual servoing on the tool tip. It also enabled the calibration of a ViKY® robot used as an endoscope holder with a second ViKY® robot used as an instrument holder, so that a surgical instrument could be controlled even though the two robotic systems were not rigidly linked (see this link).
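The visual servoing idea can be illustrated with a minimal proportional controller that converts the tip's pixel offset from the image centre into angular rate commands. This is a sketch under stated assumptions, not the controller used with ViKY®: it assumes a pinhole camera whose principal point is at the image centre and a hypothetical two-axis (pan/tilt) holder driven by angular rates. The function name `servo_command`, its gains, dead band and sign convention are all illustrative.

```python
import math


def _clamp(x, limit):
    """Saturate x to the interval [-limit, limit]."""
    return max(-limit, min(limit, x))


def servo_command(tip_px, image_size, fx, fy, gain=0.5, max_rate=0.2, deadband_px=10.0):
    """Proportional image-based servoing that re-centres a detected tool tip.

    tip_px: (u, v) tip position in pixels; image_size: (width, height).
    Returns hypothetical (pan_rate, tilt_rate) in rad/s. Assumed sign convention:
    positive pan turns the view towards +u, positive tilt towards +v.
    """
    # Pixel error between the tip and the image centre (assumed principal point).
    eu = tip_px[0] - image_size[0] / 2.0
    ev = tip_px[1] - image_size[1] / 2.0
    # Dead band: ignore small errors so the holder does not chase detection noise.
    if math.hypot(eu, ev) < deadband_px:
        return 0.0, 0.0
    # Convert the pixel error into an angular error with the pinhole model.
    pan_err = math.atan2(eu, fx)
    tilt_err = math.atan2(ev, fy)
    # Proportional law with rate saturation.
    return _clamp(gain * pan_err, max_rate), _clamp(gain * tilt_err, max_rate)


# Example: tip 100 px right of centre in a 640x480 image, fx = fy = 500 px.
pan, tilt = servo_command((420, 240), (640, 480), fx=500.0, fy=500.0)
```

Called once per tracking update, the dead band keeps the holder still when the tip is already near the centre, and the rate clamp bounds the motion when the tip is far from it.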


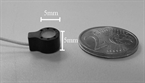

On the left, the ViKY® robotic endoscope holder commercialized by EndoControl.

On the right, a video of surgical instrument tracking. The axis and the tip of each instrument are detected in the image, which allows the 3D pose of the instrument to be determined. The ViKY® robotic endoscope holder and the camera are calibrated, and visual servoing keeps the instrument tip at the center of the laparoscopic image.
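One generic geometric step behind recovering a 3D tip position from a detected image point is back-projecting the pixel into a viewing ray and intersecting that ray with a 3D constraint. The sketch below is not the published method: it assumes a calibrated pinhole camera with intrinsic matrix `K`, and that the instrument axis is already known in the camera frame as a line through the trocar insertion point (`insertion_point` and `axis_dir` are hypothetical inputs). It returns the point of that axis closest to the tip's viewing ray.

```python
import numpy as np


def backproject(pixel, K):
    """Unit direction, in the camera frame, of the viewing ray through a pixel."""
    d = np.linalg.solve(K, np.array([pixel[0], pixel[1], 1.0]))
    return d / np.linalg.norm(d)


def tip_on_axis(pixel, K, insertion_point, axis_dir):
    """Point of the 3D instrument axis closest to the tip's viewing ray.

    The camera centre is the origin; the ray is t * r and the (hypothetically
    known) axis is p + s * a, with p the trocar insertion point.
    """
    r = backproject(pixel, K)
    a = np.asarray(axis_dir, dtype=float)
    a = a / np.linalg.norm(a)
    p = np.asarray(insertion_point, dtype=float)
    b = r @ a                      # cosine of the angle between ray and axis
    denom = 1.0 - b * b            # both directions are unit vectors
    if denom < 1e-12:
        raise ValueError("viewing ray is parallel to the instrument axis")
    # Minimise |t*r - (p + s*a)|^2: the normal equations give t, then s.
    t = (r @ p - b * (a @ p)) / denom
    s = t * b - a @ p
    return p + s * a


# Example with a synthetic camera (focal length 500 px, principal point (320, 240)).
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
tip = tip_on_axis((370.0, 140.0), K, insertion_point=(0.05, 0.03, 0.02),
                  axis_dir=(-0.04, -0.05, 0.08))
```

With real, noisy detections the ray and the axis rarely intersect exactly, which is why the closest point is computed rather than an exact intersection.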


See "beyond the visible"

During a radical prostatectomy, the surgeon seeks the best compromise between complete removal of the prostate gland and preservation of the neighbouring structures. To support the surgeon's gesture, we are investigating three leads that can help him better visualize the surgical environment:

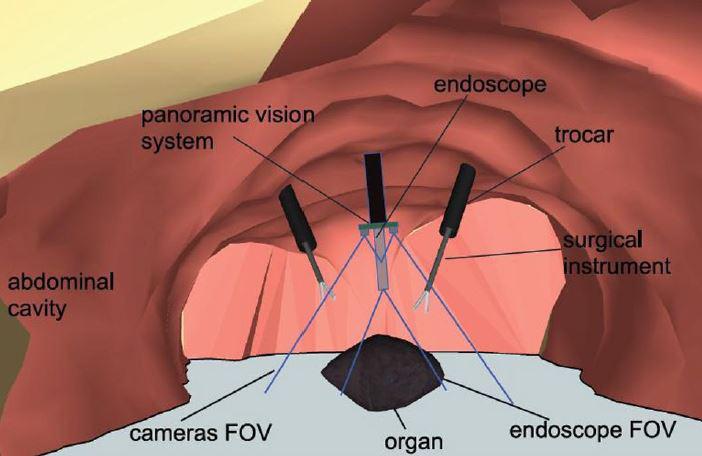

- video imaging, because the images provided by mini CMOS cameras offer interesting prospects for widening the surgeon's field of view beyond the laparoscopic view alone. We have developed a "global vision" system using two CMOS cameras positioned like glasses around the endoscopic trocar (see this article).

On the left, the global vision concept. On the right, the first prototype, with a simple deployment system.

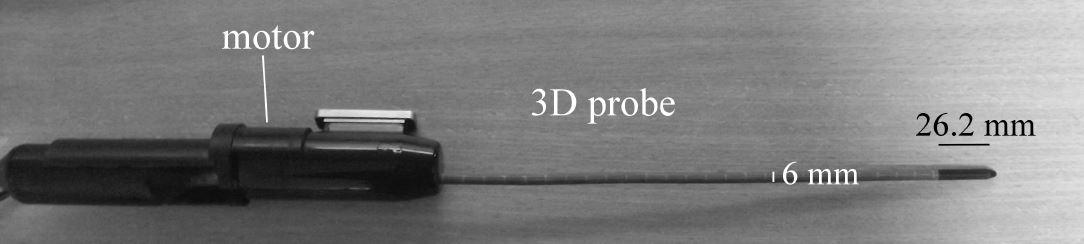

- ultrasound imaging, because it provides volumetric images that could help the surgeon visualize the prostatic capsule and the neurovascular bundles. In collaboration with the VERMON company, we have developed a motorized intra-urethral ultrasound probe that images from the heart of the prostate.

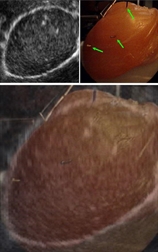

On the left, the motorized intra-urethral ultrasound probe. On the right, a sample image obtained with the Ultrasonix ultrasound machine.

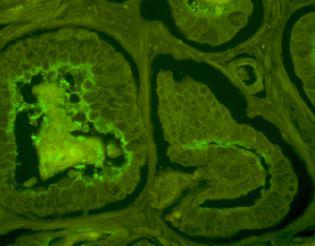

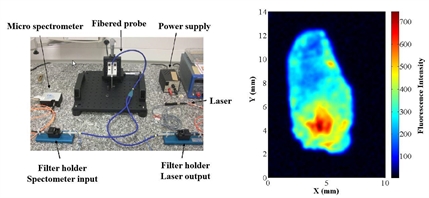

- fluorescence imaging, because it can reveal morphological and functional information about the observed tissues (prostatic or non-prostatic, malignant or healthy). As part of a collaboration, the FEMTO-ST laboratory has designed a fluorescence test bench that measures the fluorescence of fresh prostate samples (collected within a biomedical research protocol).

On the left, the fluorescence of a histological slice of prostatic tissue observed under a confocal microscope. On the right, the fluorescence test bench and the associated fluorescence image of a prostate chip (color scale).

These prototypes will allow us to set up and refine our techniques. This article summarizes the results obtained at the end of the DEPORRA project, thanks to funding from the French Research Agency through its TecSan 2009 program.
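For the motorized intra-urethral probe, a volume can be built by placing each 2D frame in a common 3D probe frame according to its acquisition angle. The sketch below assumes (the probe geometry is not detailed here) that the motor rotates a 2D imaging plane about the probe axis `z`; its conventions are hypothetical: the image plane contains the axis, columns measure distance from the axis, rows measure position along it, and pixels are square with size `pixel_mm`.

```python
import numpy as np


def slices_to_points(frames, angles_rad, pixel_mm):
    """Map a rotational sweep of 2D frames to 3D points in the probe frame.

    frames: sequence of (n_rows, n_cols) intensity arrays.
    angles_rad: rotation of each frame about the probe axis z.
    Returns an (N, 3) array of coordinates in mm and an (N,) array of intensities.
    """
    pts, vals = [], []
    for frame, theta in zip(frames, angles_rad):
        frame = np.asarray(frame, dtype=float)
        rows, cols = np.indices(frame.shape)
        radial = cols.ravel() * pixel_mm   # distance from the probe axis
        axial = rows.ravel() * pixel_mm    # position along the probe axis
        # Rotate the in-plane point (radial, 0, axial) by theta about z.
        xyz = np.column_stack((radial * np.cos(theta),
                               radial * np.sin(theta),
                               axial))
        pts.append(xyz)
        vals.append(frame.ravel())
    return np.vstack(pts), np.concatenate(vals)
```

Resampling these scattered points onto a regular voxel grid (for example by nearest-neighbour assignment or interpolation) would be a separate step.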

Image Fusion

For the multimodal information (provided in different reference frames) to be exploited seamlessly by the surgeon, it must be fused into a single reference frame. We are currently studying the fusion of laparoscopic and ultrasound images. The two modalities can be fused using landmarks that are localized and paired in each modality; this pairing makes it possible to compute the 3D rigid transformation between the ultrasound and laparoscopic reference frames.
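Computing a rigid transformation from paired landmarks is a classic least-squares problem. The sketch below uses the standard SVD-based solution (Kabsch/Arun); it illustrates that textbook technique and is not necessarily the one used in this work. `src` and `dst` are hypothetical arrays holding the same landmarks expressed in the ultrasound and laparoscopic frames.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ≈ R @ src + t.

    src, dst: (N, 3) arrays of paired landmarks (N >= 3, not collinear).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets.
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation, not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = cd - R @ cs
    return R, t
```

At least three non-collinear pairs are required; the determinant correction guarantees a proper rotation even when noisy or nearly planar landmarks would otherwise yield a reflection.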

In the context of radical prostatectomy, automatically detecting and pairing natural landmarks is extremely challenging. We are therefore studying two approaches based on artificial landmarks. The first uses surgical needles inserted into the prostate: with this approach, we manually segmented the needles in a laparoscopic image and in a transrectal ultrasound volume acquired on a test bench. The second relies on "active" ultrasound markers (manufactured by VERMON), which can be localized precisely with our intra-urethral ultrasound probe.


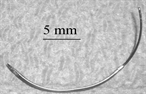

Top, a surgical needle. Bottom, an "active" ultrasound marker.

Fusion of a transrectal ultrasound volume and a laparoscopic image based on the manual localization of surgical needles.

"Surgical decision" assistance

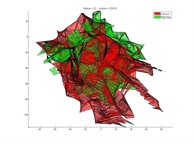

The three building blocks described above are key to interpreting the surgeon's gesture (the position of his surgical instruments relative to relevant structures, all in the same reference frame). This modelling could help compare the current gesture with an "optimal" surgical procedure, thus helping junior surgeons take the "best" surgical path (especially during their first 250 procedures). We began addressing this question through a PhD (see supervision section) focused on the "Quantification of the quality of surgical gestures based on a-priori knowledge". Part of the work consisted in computing metrics from the real-time localization of surgical instruments in order to predict the level of expertise of volunteers (surgeons or medical students). The metrics were computed for gestures performed on a test bench (object transfer, cutting, suturing, ...). Expertise was assessed using the Global Operative Assessment of Laparoscopic Skills (GOALS) and the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS) methodologies.
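As an illustration of metrics that can be derived from a tracked instrument trajectory, the sketch below computes duration, path length, mean speed and a crude workspace volume. These particular metrics, and the axis-aligned bounding box used as a proxy for the explored volume, are assumptions for illustration; the text does not list the metrics used in the PhD work.

```python
import numpy as np


def gesture_metrics(positions, timestamps):
    """Simple kinematic metrics from a tracked tool-tip trajectory.

    positions: (N, 3) tip positions (e.g. in mm); timestamps: (N,) increasing times in s.
    The metric set is illustrative only.
    """
    p = np.asarray(positions, dtype=float)
    t = np.asarray(timestamps, dtype=float)
    # Euclidean length of each step between consecutive samples.
    steps = np.linalg.norm(np.diff(p, axis=0), axis=1)
    duration = float(t[-1] - t[0])
    path_length = float(steps.sum())
    # Side lengths of the axis-aligned box enclosing the trajectory.
    extent = p.max(axis=0) - p.min(axis=0)
    return {
        "duration_s": duration,
        "path_length": path_length,
        "mean_speed": path_length / duration if duration > 0 else 0.0,
        # Crude proxy for the explored workspace volume.
        "workspace_volume": float(np.prod(extent)),
    }
```

A more faithful measure of the explored volume (for example a convex hull of the samples) could replace the bounding box; the box is used here only because it needs nothing beyond NumPy.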

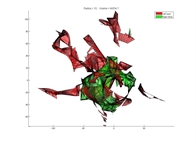

Top, the volume explored by an "expert" surgeon for a task performed on a test bench. Bottom, the volume explored by a medical student for the same task. Note the smaller workspace of the expert.